Twenty Talking Points for The Book of Why

A few months before publication, Basic Books asked Judea and me if we could write down some talking points that their salespeople could use when pitching our book to customers (bookstores, mostly). This was a very interesting exercise, and it made me think: Maybe we should have written the talking points first, and the book second!

In any event, here are my talking points (these were influenced by Judea's but are not identical to his). If you are a busy reader, you may consider this the Cliffs Notes version of our book. Needless to say, the book has a lot more information on each of these points.

- “Why” questions are questions about causes and effects.

- The human brain is the greatest technology ever invented for answering such questions.

- Big Data, by itself, cannot answer even simple causal questions.

- Deep learning, despite its successes, does not address causality.

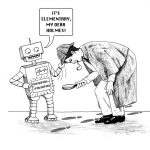

- If we want to create “strong AI,” we must emulate the way that humans think about causal processes.

- Key concept: The Ladder of Causation. 1) Association. 2) Intervention. 3) Counterfactuals. Rung 1 is the level at which animals and most machine learning programs operate. Rung 2 is the level at which we understand how our actions affect the environment. Rung 3 is the level at which we can ask “what if” questions, which are essential for understanding “Why?”

- Statistics has long been blind to causality and has deliberately avoided causal language.

- Because science depends on statistics to tell us how to analyze data, scientists have been indoctrinated with the taboo against causation.

- An exception to the taboo is the randomized controlled trial (RCT), which has been accepted as a “gold standard” for discussing causation. However, in many cases RCTs are impractical or unethical.

- Real-world consequences: Does smoking cause cancer? Scientists struggled greatly in the 1950s and 1960s to find any methodology that would address the question. Tobacco companies portrayed the scientists’ lack of methodology as a lack of certainty.

- Judea and others have developed a language and methodology for: a) expressing causal relationships; b) framing causal queries; c) answering causal queries or determining that they cannot be answered. More simply: they have given scientists tools for extracting causation from correlation.

- Our methods challenge the supremacy of randomized controlled trials; in fact they explain why the RCT works and what to do in cases where an RCT is impossible.

- A key tool is the causal diagram. Learning how to use causal diagrams is a mechanical skill that is no more difficult than reading a map of one-way streets.

- Using tools of causal reasoning, it is conceivable that we could have answered much more quickly whether smoking causes cancer.

- Some fun and controversial paradoxes, such as the Monty Hall Problem and Simpson’s Paradox, can be resolved easily with causal diagrams.

- Instead of dismissing such paradoxes, we should view them as “canaries in the coal mine” that reveal flaws in our conventional thought patterns about probabilities and causes.

- In the future, we can address an endless variety of causal questions. Case studies: a) Did manmade global warming cause this heat wave? b) Did this university discriminate against women in admissions? c) Does this gene cause lung cancer directly or by predisposing people to smoke more? d) What is the effect of a job training program on salary? e) What caused an autonomous vehicle to take the wrong action?

- The algorithms Judea and his colleagues have developed can be the core of a “causal inference engine” for a future AI.

- The causal inference engine will allow the computer to talk with humans using our native cause-effect vocabulary.

- AIs can and should be taught causal reasoning as a prerequisite to ethical behavior and beneficial collaboration with humans.
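
For readers who like to see numbers, here is a minimal sketch of the point about Simpson’s Paradox and causal diagrams. It is not taken from the book. The counts are invented purely for illustration, and the sketch assumes a particular causal diagram: severity of illness influences both who gets the treatment and who recovers (severity → treatment, severity → recovery, treatment → recovery). Under that assumption, the standard adjustment formula, P(recovery | do(treatment)) = Σ_z P(recovery | treatment, z) P(z), gives the effect of intervening (rung 2), while the pooled comparison gives only the association (rung 1). The names `COUNTS`, `naive_rate`, and `adjusted_rate` are hypothetical.

```python
from fractions import Fraction

# Hypothetical counts, invented for illustration only:
# (severity, treated) -> (number recovered, number of patients)
COUNTS = {
    ("mild", True): (90, 100),
    ("mild", False): (340, 400),
    ("severe", True): (200, 400),
    ("severe", False): (40, 100),
}


def naive_rate(treated):
    """Rung 1 (association): P(recovery | treatment), pooling all patients."""
    recovered = sum(r for (_, t), (r, n) in COUNTS.items() if t == treated)
    total = sum(n for (_, t), (r, n) in COUNTS.items() if t == treated)
    return Fraction(recovered, total)


def adjusted_rate(treated):
    """Rung 2 (intervention), assuming severity is the only confounder:
    sum over severity z of P(recovery | treatment, z) * P(z)."""
    grand_total = sum(n for _, n in COUNTS.values())
    rate = Fraction(0)
    for severity in {s for s, _ in COUNTS}:
        recovered, n = COUNTS[(severity, treated)]
        # P(z): share of all patients who fall in this severity group
        group_total = sum(m for (s, _), (_, m) in COUNTS.items() if s == severity)
        rate += Fraction(recovered, n) * Fraction(group_total, grand_total)
    return rate


naive_effect = naive_rate(True) - naive_rate(False)
adjusted_effect = adjusted_rate(True) - adjusted_rate(False)
print("naive difference:   ", float(naive_effect))
print("adjusted difference:", float(adjusted_effect))
```

With these made-up counts, the pooled comparison makes the treatment look harmful (a difference of -9/50 in recovery rates), even though it helps within both the mild and the severe group. The adjusted comparison gives +3/40. Which answer is correct depends entirely on the diagram: if severity were a consequence of the treatment rather than a cause of it, adjusting for it would be the wrong move, and no amount of data alone could tell us that.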