So far I have looked at three games from the AlphaZero-Stockfish match: #5, #9, and #10 from the ten games provided in the arXiv preprint. All three are amazingly similar, and at the same time they are amazingly unlike almost any other game I’ve ever seen. In each case AlphaZero won by sacrificing a piece for compensation that didn’t fully emerge until at least 15 or 20 moves later.

To me this can’t be an accident. It points to a fundamental difference between the alpha-beta search paradigm used by almost all existing chess engines and the deep-learning paradigm used by AlphaZero. The former approach is much more intuitive: you look at each of your moves, then the best responses of the opponent, then your best responses to those responses, and so on. You always need some way of evaluating the positions at the end of the search tree so you can say which is “best.” For that purpose, previous chess engines have leveraged all sorts of knowledge that the human engineers have programmed into them (with the advice of grandmasters).

This approach relies on human knowledge and heuristics, which could be an advantage or a disadvantage, depending on how accurate that knowledge is. Worse, this method always leads to a “horizon effect,” because the machine can’t take into account anything that will happen after the point where it chooses to truncate the search tree. In early chess computers the horizon effect was really severe. In more recent programs, the horizon is pushed back to the point where it rarely affects games with humans. But playing against AlphaZero, Stockfish ran into the horizon effect big-time. It could not see things that were going to happen 20 to 25 moves ahead.

How does AlphaZero avoid the horizon effect? To evaluate a position, it simply plays hundreds of random games from that position. To you or me this may seem like a crazy idea, but actually it makes a certain amount of sense. In some positions there may be only one “correct” way for White to win — but often in these positions Black is visibly in trouble anyway. If you give the position to two grandmasters, they might play the correct line and White would win. If you give it to two 2200 players, they may play almost correctly and White will still win. If you give the position to two 1400 players, they will make mistakes right and left — but White will still win. So the point is that even incorrect play will still give you a sense of who is winning, as long as the mistakes are equally distributed on both sides.

Because AlphaZero is playing complete games, albeit random and imperfect ones, it is not susceptible to the horizon effect. Consider, for example, blockaded or “fortress” positions. Machines that use alpha-beta search have real trouble evaluating these. Even good chess engines will mistakenly conclude that White is two or three pawns up, while humans will look at the position conceptually and say, “White has no way to break through.”

Give AlphaZero one of these same blockaded positions, and it will see that in the blockaded position White only wins 1 percent of the games and 99 percent end in draws. Therefore, it will avoid the blockaded position. Presto, one of the weaknesses of chess computers goes away.

Even more impressively, AlphaZero was able to evaluate sacrifices that were many moves deep. It did not do this by exact calculation. No doubt it does some brute force analysis, but way less than Stockfish. According to the paper, it searches 80,000 positions per second, compared to 70 million for Stockfish. Instead, it plays a bunch of random games with the sacrifice and sees that White wins 80 percent of them. As far as AlphaZero is concerned, that is good enough evidence that the sacrifice is a really good move. In a way we’re reversing the logic from above. If we see that White usually wins the position if it’s played by weaker players, chances are that White still wins if it’s played by grandmasters or superhuman chess computers.

There is one other conceptual difference between the way that AlphaZero and other engines do business, which I think is really important. Engines provide an assessment in terms of material equivalent; e.g., White is 1.5 pawns ahead. AlphaZero evaluates the position in terms of its expected winning percentage. If White wins 40 percent of the random games, draws 50 percent, and loses 10 percent, then the evaluation is 0.65 points, the expected number of points that White will score per game. (0.65 = 1 x 0.40 + ½ x 0.50) This is much more logical than evaluating the position in terms of pawns; after all, we play chess to win games, not to win pawns. Nevertheless, no previous machine or human has demonstrated a convincing ability to assess a position this way. Now AlphaZero has.

Once you grasp this point, you understand that AlphaZero’s approach is holistic, rather than reductionistic. It doesn’t reach evaluations by breaking the position down into material, space pawn structure, etc. Those are human heuristics, suitable for human brains. AlphaZero may not even know what a doubled pawn is! Instead AlphaZero lets the computer evolve its own heuristics, suitable for a silicon brain.

Okay, I’ve babbled on too long here. Let’s see how these considerations work out in a real game. This was game #9 of the ten games released publicly by the AlphaZero team.

AlphaZero – Stockfish

1. d4 e6 2. e4 d5 3. Nc3 Nf6 4. e6 Nfd7 5. f4 c5 6. Nf3 cd

Position after 6. … cd. White to move.

Position after 6. … cd. White to move.

FEN: rnbqkb1r/pp1n1ppp/4p3/3pP3/3p1P2/2N2N2/PPP3PP/R1BQKB1R w KQkq – 0 7

Stop! Already this is an interesting moment. The conspiracy theorists and Luddites on the Internet, who refused to believe that AlphaZero is for real, pointed to this kind of move as evidence that the competition was rigged. 6. … cd is a side line, and pretty clearly inferior to 6. … Nc6 or 6. … a6. Why would Stockfish play such a move?

Well, most chess engines vary their openings to some extent, partly to make it more interesting for their human opponents but also because an engine that puts all of its eggs into one opening basket is vulnerable to new discoveries in that opening. Remember, also, that AlphaZero and Stockfish played a 100-game match. The AlphaZero team cherry-picked the best ten of its 28 victories to show us. It stands to reason that in those games Stockfish must have done something wrong. So it’s not that the match was rigged; we’re just seeing a very biased selection of games from the match.

Also, consider the absurdity of the Luddites’ argument. They are saying the match was rigged because Stockfish didn’t play the book move. But AlphaZero, its opponent, doesn’t even have a book! It developed all of its opening knowledge organically, without human input.

7. Nb5! …

This is why Black’s move was not accurate. In the main lines White doesn’t get to recapture on d4 with this knight.

7. … Bb4+ 8. Bd2 Bc5 9. b4! Be7 10. Nbxd4 Nc6 11. c3 a5 12. b5 Nxd4 13. cd Nb6 14. a4 Nc4 15. Bd3 …

Position after 15. Bd3. Black to move.

Position after 15. Bd3. Black to move.

FEN: r1bqk2r/1p2bppp/4p3/pP1pP3/P1nP1P2/3B1N2/3B2PP/R2QK2R b KQkq – 0 15

This is pretty much a textbook case of a French Defense gone wrong for Black. White’s center is rock-solid and unassailable, Black’s bad QB has absolutely no scope, and Black’s king cannot castle safely in either direction. That being said, it’s not necessarily easy for White to win. Black’s king is actually kind of safe in the center because of the blocked pawn formation.

Now something interesting happens.

15. … Nxd2 16. Kxd2! Bd7 17. Ke3! …

Amazing! AlphaZero recognizes that there is absolutely no danger to its king, which is safer here than it would be on the queenside and less in the way than it would be on the kingside. It’s fun to see the machine playing so unconventionally, and it teaches us humans to think outside of the box.

17. … b6 18. g4 h5 19. Qg1! …

More unconventional play. Most humans, including me, would probably play 19. h3 without thinking.

19. … hg

Stockfish could not defend with 19. … g6 because 20. gh Rxh5? runs into 21. Bxg6! and 20. gh gh 21. Qg7 would be just sad.

20. Qxg4 Bf8 21. h4 Qe7 22. Rhc1 g6 23. Rc2 Kd8 24. Rac1 Qe8

FEN: r2kqb1r/3b1p2/1p2p1p1/pP1pP3/P2P1PQP/3BKN2/2R5/2R5 w – – 0 25

A moment of humor amidst the battle. Black’s king and queen do a pas de deux and trade places.

Black has nothing to gain by trying to play actively with 24. … Qa3, because 25. Ng5 Rg8 26. Nxf7+ Ke8 27. Rc3 Qxa4 28. Bxg6 is just too strong. So Stockfish doubles down on ultra-passivity. How is White going to break through?

25. Rc7 Rc8 26. Rxc8+ Bxc8 27. Rc6 Bb7 28. Rc2 Kd7 29. Ng5 Be7?!

Evidently this was a mistake, but one we can be grateful for because it allows AlphaZero to display its chess ultra-wizardry.

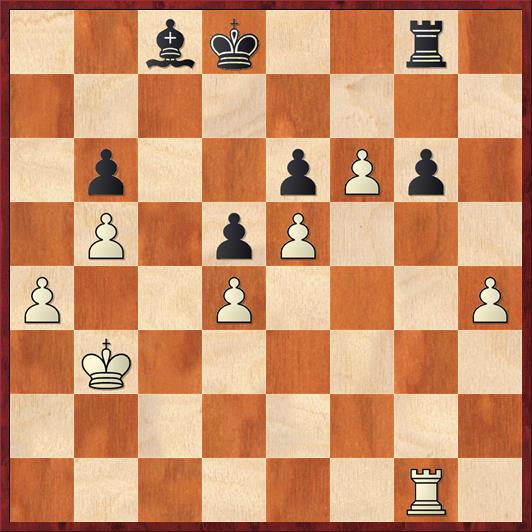

Position after 29. … Be7. White to move.

Position after 29. … Be7. White to move.

FEN: 4q2r/1b1kbp2/1p2p1p1/pP1pP1N1/P2P1PQP/3BK3/2R5/8 w – – 0 30

30. Bxg6!! …

The phrase “bolt from the blue” scarcely does justice to AlphaZero’s move. A better analogy comes from Douglas Adams’ Hitchhiker’s Guide to the Galaxy:

When you’re cruising down the road in the fast lane and you lazily sail past a few hard-driving cars and are feeling pretty pleased with yourself and then accidentally change down from fourth to first instead of third thus making your engine leap out of your hood in a rather ugly mess, it tends to throw you off your stride in much the same way that this move threw Stockfish.

(I changed the last three words of the quote.)

There are two parts to this piece sacrifice. The first is the tactical part, which a good grandmaster could work out on a good day, and that ensures that White doesn’t lose. Of course, Stockfish surely saw the tactical part, which is only about four or five moves deep. But it wasn’t the least bit afraid because it saw the position as dead even after those four or five moves … and for many moves after that.

On the other hand, AlphaZero, with its infinite depth of search, sees that victory is almost inevitable for White. Conceptually, the point is this: in the endgame, Black’s bishop is literally worth just a pawn. Once you truly believe this, then you understand that the material situation cannot be any worse than equal for White, and on top of that all of his pieces (especially his king) are better than Black’s.

Out of curiosity I gave this position to my copy of Rybka. After 16 ply (8 moves) it evaluates the position at 0.00. I re-started the analysis at that point, with the position on move 37. Starting from that position, at 14 ply it still sees the position as even (0.01 in favor of Black), but finally at 15 ply it gives White a 0.40-pawn edge. So from Rybka’s point of view, AlphaZero’s piece sacrifice finally starts to make sense 31 ply (15 moves) after the diagrammed position. I’m guessing that 31 ply was beyond Stockfish’s horizon. One thing I haven’t mentioned is that the match was played at a minute per move, and I doubt that Stockfish can go 31 ply deep in a minute.

Now let’s see the tactical fireworks:

30. … Bxg5

Of course if 30. … fg? 31. Qxe6+ followed by 32. Nf7+ wins.

31. Qxg5 fg 32. f5!! …

A master stroke. The pawn can seemingly be captured in two ways, but both 32. … ef 33. Qf6 Qe7 34. Rc7+ and 32. … gf 33. Qg7+ Qe7 34. Rc7+ win for White. So Black has to leave the pawn where it is.

32. … Rg8 33. Qh6! …

The pawn on f5 is still taboo because of 34. Qh7+.

33. … Qf7 34. f6! …

Position after 34. f6. Black to move.

Position after 34. f6. Black to move.

FEN: 6r1/1b1k1q2/1p2pPpQ/pP1pP3/P2P3P/4K3/2R5/8 b – – 0 34

With 34. f6, the position abruptly changes from tactical back to strategic. Rybka sees both 34. fe+ and 34. f6 as being dead equal. Many of us would be tempted to open the position up with 34. fe+, hoping to get at Black’s king. But AlphaZero realizes that keeping the position closed is actually the right strategy. This is the way to ensure that Black’s bishop is completely locked in and only worth a pawn. (In fact, in many lines Black would be glad to trade it for White’s passed f-pawn.) It’s worth noting that Black is in near zugzwang; the rook and queen must not move, the bishop is extremely limited and the king can only shuffle between d7, d8, and e8.

Of course, AlphaZero doesn’t reason that way. It just saw that random games with the move 34. f6 have better results for White than random games with the move 34. fe+.

Continuing from the diagram:

34. … Kf8 35. Kd2?! …

Yes, that’s a question mark that I have attached to AlphaZero’s move! In this case it’s only a pro forma question mark. With 35. … Ke2 AlphaZero could have avoided the queen trade that Stockfish forces on move 38. However, because of its holistic reasoning, Stockfish doesn’t mind trading queens because it sees that White’s winning percentage is still good.

On the other hand, the exclamation point is because AlphaZero comes up with another out-of-this-world maneuver. It sacrifices a piece and then it retreats with its king and retreats with its rook. Why? In retrospect it’s simple. AlphaZero wants to play its queen to e3 (or maybe c1) and needs the king out of the way. It also wants the king to be off the third rank so the queen can move back and forth. On the second rank, though, the king is in the way of the rook, so AlphaZero moves the rook to the first rank on the next move.

In retrospect I understand it, but I would never in a million years think of this maneuver myself.

35. … Kd7 36. Rc1 Kd8 37. Qe3 Qf8

Another option was 37. … Qd7, but I don’t think that it changes the position in any fundamental way. I would be tempted to play 37. … Rh8, but 38. Qa3 Rxh4 39. Qd6+ is too strong.

38. Qc3 Qb4 39. Qxb4 ab 40. Rg1 b3 41. Kc3 Bc8 42. Kxb3 …

Position after 42. Kxb3. Black to move.

Position after 42. Kxb3. Black to move.

FEN: 2bk2r1/8/1p2pPp1/1P1pP3/P2P3P/1K6/8/6R1 b – – 0 42

Twelve moves after sacrificing the piece, White has gotten back a second pawn for it. But if we count Black’s bishop as only a pawn, White is now a pawn ahead. Anyway, even conventional chess engines can now see that Black is in big trouble. His pieces cannot cope with the passed pawns on both sides — the b-pawn, the f-pawn, and potentially the h-pawn.

42. … Bd7 43. Kb4 Be8 44. Ra1 Kc7 45. a5 Bd7 46. ab+ Kxb6 47. Ra6+ Kb7 48. Kc5 Rd8

You might wonder what happens if Stockfish tries to play more actively with 48. … Rh8. The answer is that it’s just too slow: 49. Ra2 Rxh4 50. b6 Be8 51. Ra7+ Kb8 52. f7 Bxf7 (Black’s bishop is finally forced to give itself up for a pawn, as I said many moves ago) 53. Rxf7 Rh1 54. Kd6 and the king gobbles up all of Black’s pawns. One of the many astonishing things about AlphaZero’s strategy is that White’s h-pawn looks so weak, yet Black never had a chance to take advantage of it.

The rest of the game doesn’t need any comment.

49. Ra2 Rc8+ 50. Kd6 Be8 51. Ke7 g5 52. hg Black resigns.

My friend, chess master Gjon Feinstein, said that what really impressed him was the conceptual nature of AlphaZero’s play. There were so many remarkable concepts in this game: the king march to e3, the queen maneuvers like Qd1-g1-g4 and Qh6-e3-a3-d6 (threatened) and the calm retreats 35. Kd2 and 36. Rc1 and of course the spectacular piece sacrifice 30. Bxg6 leading to a near-zugzwang. Though we humans will never learn to think the way that AlphaZero does, we can still learn that the game of chess is even richer than we thought it was.

{ 9 comments… read them below or add one }

This is a very good post, Dana. Based on the above, I can summarize my understanding of AlphaZero as below:

Alpha Zero uses a Policy Chain nueral network to shortlist the most promising positions that need to be analysed. In this match, the developers mentioned that it analyzed 80,000 moves per minute, while stockfish analysed 1000 times more positions

Alpha Zero also has another Value Chain neural net, that can judge a position as won/lost/drawn just by “looking” at it and without it playing thru to the end.

In short, the Policy Network acts like a Opening Book of traditional engines, and the Value Network acts like the endgame Table Base of traditional engines.

But Alpha Zero needs something to connect the opening to the ending, and for this it uses Monte Carlo analysis, where it randomly plays many games for each position, till it obtains a clear value network judgement.

In this way, Alpha Zero is able to “see” 20-30 moves ahead for each position it analyzes, whereas traditional engines can only analyze 9-15 moves ahead for each position they analyze.

In other words Narrow-but-Deep analysis as opposed to Broad-but-Shallow analysis.

The most mind boggling thing for me here is the Monte Carlo simulation analysis, which means AlphaZero might be playing many Sample Games (say 100 for each position) when it is analyzing its next move. So 80,000*100 = 8 million chess games every minute or approximately, 1 full chess game, every 100 Microseconds. Mind-boggling!

I think that’s basically right. I think that when they say it “only” looks at 80,000 positions a second, they’re kind of soft-pedaling what it really does.

Dana as usual you write an interesting analysis, but as a very much average chess player I am inclined to say “so what”. I suspect (and you with your numbers abilities could verify one way or another) that the majority of chess players are more interested in how effective they can calculate moves rather than some chess playing machine. All sorts of machines can do so many many things better and more efficiently than humans.

If we could all play like Alpha Zero or even Stockfish would it feel better than seeing our opponent’s face turn red when he makes a blunder, or when we outplayed a long time opponent finally beating him, and having him say “man, you really outplayed me this time. Good for you.” ?

Chess playing machines or not, I still enjoy your blog.

Rob

I was surprised at how much passion I saw on Facebook about AlphaZero, because after all it was just one computer program beating another. But some people really do care about this!

Are you sure AlphaZero plays many random games out to the end? That is how Monte Carlo Tree Search Go bots used to work before AlphaGo, but I was under the impression AlphaZero doesn’t calculate all the way to the end of the game any more. Doesn’t it explore a range of plausible moves to a certain depth, and then evaluate the resulting position using its network?

I’m not completely sure. I was basing this description on what I understood about AlphaGo after writing an article about it last year. I assumed that AlphaZero would work the same way. It doesn’t make sense to me to abandon this play-it-all-the-way-out approach, given that it worked with the game of go. But I’m certainly willing to be corrected on this point if somebody has more recent knowledge.

Where the first AlphaGo indeed did play out the entire game – this is something that makes more sense in Go than in Chess – The next instance of AlphaGo, namely AlphaGoZero did not. Instead AlphaGoZero has the ability to judge positions (the value network) and does not need to play out the entire game. AlphaZero again is based on AlphaGoZero and also has a value network.

Beside the value network, the neural net has another output: The policy network that decide what moves are most likely good. The “random playouts” AlphaZero uses are not completely random, they are based on the scores given by the policy network. Over a single playout game it does indeed play randomly, if the policy net say “this move is 1% good”, it might just randomly play that 1% move. But over the 80.000 moves it played, 79.000 of them will be the move a grandmaster (well, AlphaZero) would prefer. So instead of writing “If we see that White usually wins the position if it’s played by weaker players”, it actually is “White usually wins the position if it’s played by grandmasters”.

To also respond to the 80.000 positions per seconds it evaluate i read in another reply: I do believe it actually only analyses 80.000 positions per seconds and you cannot multiply that by 100 moves. A neural network is really one big whooping mathematical formula. In goes the chess board, out comes a value (win chance) and policy (best move probability). This is a little different from a chess book that has exact information, but nevertheless the policy network can still give you an accurate list of possible moves. How accurate really depend on how large the neural net is (the larger the formula, the more details it can contain), as well as how well it is trained (during training, the network is updated to reflect the outcome of training games more accurately).

I believe the whole ability of AlphaZero to “read 20-30 moves ahead” stems from the value network that evaluate positions where certain pieces are completely blocked and unable to come into play a inferior, thus AlphaGo is willing to sacrifice pieces to gain positional advantages. It does not actually read 20-30 moves ahead, and certainly does not read every possible move 20-30 moves down, even if some of the random playouts it did do reach that many move deep.

Thanks for the in-depth explanation! In particular I appreciate the explanation of the terms “value network” and “policy network,” which I didn’t fully understand from the AlphaZero and AlphaGo papers.

The other important thing you mentioned is that the games aren’t really “random.” They are and they aren’t, depending on what one means by the word “random.” In layman’s speech “random” would mean all moves are given equal weight, and this is not what AlphaZero does. Instead, as you said, stronger moves (according to the policy network) are given higher weights, and then the actual move is chosen randomly with respect to those weights (or “probability distribution” to use a more technical term).

I decided in my original post not to go into this aspect because I thought readers might find the two notions of “random” to be confusing. However, it is of course critically important because this is how the machine learns. And one of the remarkable things is that it is able to bootstrap itself up. It starts from total ignorance and playing total random moves. By observing enough random games played at a Elo strength of, say, 1000, it is able to teach itself a value network whose strength is 1200. then by observing random games played by this 1200 network, it improves the value network to 1400 strength. And so on, all the way up to grandmaster and beyond. This is truly amazing. And because the ability to improve is *built in* to the system, a program like Stockfish will never be able to keep up.

A couple of minor corrections. I think 4. e6 should be 4. e5. And 34… Kf8 should be 34… Ke8. But great post.